Automation is a difficult but essential strategy for business managers in the energy sector. Indeed, capital-intensive industries like utilities or oil and gas see automation as an effective method for improving efficiency, safety, and reliability of operations. For instance, automation has made great strides in the oil and gas industry, including:

These are amazing developments – and the industry will only continue to push itself further. But while recent reductions in headcount have been dramatic (currently only 5-10 crew members required on a land rig vs. more than double that amount only two years ago), the pace of labor replacement is likely to slow as the front wave of automation moves past simple, straightforward tasks.

The industry has generated enormous value by incorporating these new technologies into its operational systems; for instance, the reduction in headcount has been rapid and dramatic (only 5-10 crew members are currently required on a land rig, versus more than double that number only two years ago).

Barring a contraction in available capital, the first wave of automation is beginning to move past simple, straightforward tasks and to replace a larger portion of the oil and gas workforce. While automation is often touted as an important step toward decreasing risk, what is less discussed is the associated increase in risk. Whenever automation replaces a human worker, an "agent" who is accountable and responsible for outcomes is removed. Consequently, when something goes wrong, where there used to be a person to whom the manager could point as the source of the issue, there is now a machine. Who takes the blame if the machine fails? The manufacturer? The manager? The system designer?

This is a complex issue. That is why it is critical to establish multiple levels of redundancy in automated systems: redundant automation reduces the likelihood of failures. And yet, herein lies another problem with automation: redundancy often counteracts the original benefits of automation. Imagine the amount of waste if every automated system were fully redundant: wasted energy, wasted space, wasted CapEx. What redundant automation needs is balance, meaning an optimal level of redundancy that reflects the risk of failure in the system.
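The balance described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that each redundant unit costs the same, that units fail independently with the same probability, and that a loss is incurred only when every unit fails. The function names and all figures are hypothetical and do not come from any real system.

```python
def expected_total_cost(n_units, unit_cost, p_fail, failure_cost):
    """Cost of running n_units in parallel plus the expected loss if all fail.

    Assumption: failures are independent, which real systems rarely satisfy.
    """
    return n_units * unit_cost + (p_fail ** n_units) * failure_cost


def optimal_redundancy(unit_cost, p_fail, failure_cost, max_units=10):
    """Return the unit count (1..max_units) with the lowest expected total cost."""
    return min(
        range(1, max_units + 1),
        key=lambda n: expected_total_cost(n, unit_cost, p_fail, failure_cost),
    )


if __name__ == "__main__":
    # Hypothetical figures: the same unit protects a minor and a major process.
    print(optimal_redundancy(unit_cost=100, p_fail=0.1, failure_cost=10_000))
    print(optimal_redundancy(unit_cost=100, p_fail=0.1, failure_cost=1_000_000))
```

Under these assumptions, the costlier the failure, the more duplicate units the calculation justifies; a cheap failure justifies none beyond the first.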

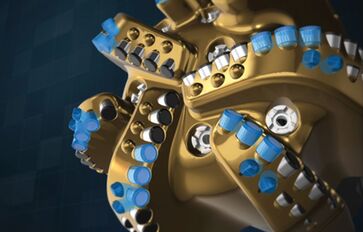

Innovating O&G drill bits once meant improving durability; today, new innovations focus on improving efficiency, self-maintenance, and productivity.

Calculating the right ratio of redundancy to risk is complicated, primarily because redundancy can be achieved in a variety of ways. For example, one system may keep a component on standby in case an active component fails (standby can consist of human intervention or an automated takeover by another component the moment failure is detected). In another design, two or more components run simultaneously and a “score” is taken across them, so that the underperformance of one component does not significantly affect the end result of the system.

Moreover, a system's architecture can incorporate distinct levels of redundancy; “hot,” “warm,” and “cold” are popular descriptors. The “hotter” the redundancy, the smaller the latency between the active system and the backup system. Hot redundancies require the most duplicate hardware and energy, and thus are the costliest to maintain. Cold redundancies often mean the backup systems need to be manually turned on or even assembled, but they are cheap. In an ideal world, hot redundancies would be reserved for the most critical or most unreliable processes, while cold redundancies would be for the systems that are the least critical or the most reliable. In reality, although automation will continue to advance, the industry will trend towards colder redundancies as long as it focuses on capital reduction and cost efficiency. There will always be a need for some level of human intervention.
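The standby and scoring arrangements described above might be sketched as follows. This is an illustration only: the function names are hypothetical, and taking the median is just one assumed way to "score" simultaneous components, not a method the article prescribes.

```python
from statistics import median


def voted_reading(readings):
    """Combine simultaneous readings so a single outlier cannot dominate.

    Assumption: the median stands in for the "score" taken across components.
    """
    return median(readings)


def read_with_standby(primary, standby):
    """Call the active component; fall back to the standby if it raises."""
    try:
        return primary()
    except Exception:
        # Automated takeover at the moment a failure is detected.
        return standby()


if __name__ == "__main__":
    def broken_sensor():
        raise RuntimeError("primary sensor offline")

    print(voted_reading([10.1, 10.0, 55.0]))               # one unit misreads
    print(read_with_standby(broken_sensor, lambda: 10.2))  # standby answers
```

In this sketch the voting arrangement is a "hot" redundancy, since every unit runs all the time, while the standby arrangement is cooler: the backup does nothing until the primary fails.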

Establishing redundancy in AI-driven systems is even more complicated. Take, for example, the process of detecting and fixing a leak. Normally humans can’t detect a leak until the leak actually happens, so there is good rationale for using AI to read, monitor, and extract patterns in leak detection. But how about taking this process to the next step? Can what is currently an alert on an operator’s dashboard be transformed into a trigger for robots to come and fix the leak? That seems like a great idea, but only if the redundancy works out. If the AI triggers a false positive that sets off a response with large consequences, a lot of money and resources could be wasted. To protect against this, a backup redundancy is needed. But if a human can’t detect the trigger, then a human can’t be the redundancy, which means a hotter redundancy is needed. If the “thought process” behind the AI trigger is not well understood, it may be difficult to work out the redundancy-to-risk calculation and design a system with the optimal amount of redundancy.

Redundancy often counteracts the benefits associated with implementing automation in the first place.

Redundancy does not and will not stop the progress of industry towards automation, but innovation will slow down if several conditions continue:

- Capital is reluctant (aka "afraid") to invest in hardware;

- The sector does not quickly develop new skill sets and services, such as “redundancy consultants” and “redundancy insurance”;

- The sector does not adhere to clear “redundancy standards” or best practices;

- There are no legal limits or caps on liability in the event of a redundancy or automation failure.

The most salient example of this is Boeing’s 737 Max system failure, which was traced back to the lack of redundancy in the logic sequence that activated the faulty MCAS flight control system. Boeing’s 737 Max system used data from only one sensor to determine the plane’s angle of attack and initiate the MCAS, leaving the design open to a faulty sensor. Furthermore, once the MCAS was activated, it could only be shut off through a multi-step manual override. Two simple redundancies could have been built into the system but were not:

- Using two sensors to determine the plane’s angle of attack;

- Making the MCAS shutoff a single-step, almost instant process like grabbing the wheel (i.e. moving the backup system to a hotter redundancy).
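The first of these redundancies, cross-checking two sensors before an automated system acts, can be sketched in a few lines. This is not Boeing's logic or any real avionics code: the function names, the trigger angle, and the disagreement threshold are all hypothetical values chosen for illustration.

```python
def sensors_agree(aoa_a_deg, aoa_b_deg, max_disagreement_deg):
    """Return True when two angle-of-attack readings are close enough to trust."""
    return abs(aoa_a_deg - aoa_b_deg) <= max_disagreement_deg


def automation_may_activate(aoa_a_deg, aoa_b_deg, trigger_deg,
                            max_disagreement_deg=5.0):
    """Allow activation only if both sensors agree and both exceed the trigger.

    Assumption: when the sensors disagree, the safe choice is to do nothing
    and leave control with the human operator.
    """
    if not sensors_agree(aoa_a_deg, aoa_b_deg, max_disagreement_deg):
        return False
    return min(aoa_a_deg, aoa_b_deg) >= trigger_deg


if __name__ == "__main__":
    print(automation_may_activate(15.0, 14.0, trigger_deg=12.0))  # both high
    print(automation_may_activate(40.0, 3.0, trigger_deg=12.0))   # one faulty
```

With a single sensor, the second call would have activated the system on the faulty 40-degree reading; with the cross-check, the disagreement blocks it.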

Failure to establish proper redundancy led to catastrophic consequences, and then the classic "blame game" ensued (item 4, above). Who shoulders the responsibility for this automation failure? Is it Boeing, which manufactured the plane? Is it the airline, which didn’t service the faulty sensors? Is it the pilots, who failed to manually override the failing automated system? Is it the FAA, which certified the system? The situation is as messy as it is unfortunate, and the lack of a clear, liable party is evidence that the discussion about redundancy is still in its infancy, even in an industry like aviation, which has a long history of automation.