Bill Gates wants a miracle. And he’s willing to put his prestige and a lot of money — as much as $2 billion — behind that pursuit. He has called for a major U.S. and global effort to stimulate research in the hope of finding a “miracle” in the science and technologies of energy production.

Why “miracle”? The motivation for Gates’s campaign is his conviction that a global energy transformation of an enormous size and scope is needed to address the prospect of climate change. Hydrocarbons — oil, natural gas, and coal — currently supply close to 85 percent of global energy, and mainstream forecasts see world energy use nearly doubling, not shrinking, in the coming decades. Even many forecasts rooted in bullish expectations for growth in alternative energies concede that hydrocarbon use will still grow rather than shrink. No existing technologies can move us away from hydrocarbons on a global scale; there are no quick, easy solutions.

By “miracle,” Gates means something that seems impossible given our current technological and scientific vantage. “I’ve seen miracles happen before,” Gates says. “The personal computer. The Internet. The polio vaccine.” Indeed, the marvels that technology has enabled and the wonders that science has explained throughout modern history, and especially over the past century, spur confidence that comparable miracles remain undiscovered and uninvented. According to Gates, such miracles are the “result of research and development and the human capacity to innovate,” rather than “chance.” This is inherently a “very uncertain process,” Gates maintains, for which there is no “predictor function.” To help the process along, Gates has not only launched a persuasion campaign but also created the Breakthrough Energy Coalition, a group of wealthy individuals coordinating their investments and philanthropic giving to develop energy alternatives to hydrocarbons. In addition, he has recommended that government spending on energy research and development (R&D) be doubled or even tripled. And how should that government R&D money be spent? Rather than accelerating or seeking to scale up yesterday’s inventions, Gates says he’d “spend it all on fundamental research.”

Revolutionizing Energy...?

In order to appreciate Gates’s stance on transforming the world’s energy systems, one need not take a position on the urgency or severity of climate change. One need only look at the record of spending on new energy technologies. By studying the pursuit of radical technological change in the energy domain, we can better understand the challenges associated with “miraculous” technological transformations in general.

From 2009 to 2014, the U.S. government spent over $150 billion in various forms of subsidies, grants, and research to support and advance “clean tech” — technologies aimed at replacing or reducing the use of hydrocarbon-based energy sources. Unsurprisingly, the production of energy from sources favored with so much federal largesse — solar, wind, and biofuels — has risen. Those three sources combined have grown from supplying just 2.5 percent of total U.S. energy consumed in 2009 to 5.1 percent in 2016. Meanwhile, over the same period, the growth in shale hydrocarbons — which did not enjoy subsidies or special federal programs — added 700 percent more energy to American production than did solar, wind, and biofuels combined. (Editor's note: shale hydrocarbons actually received enormous federal research support in the 1960s and 1970s, and continue to do so today.) The explanation for this disparity: technology has advanced faster for shale than it has for solar (or wind or biofuels, for that matter). This wasn’t the outcome many forecast a decade ago.

Consider the prospect of replacing gasoline with wind-generated electricity to charge batteries in electric cars. Here, too, there are physics-based barriers to innovation. Building a single wind turbine, taller than the Statue of Liberty, costs about the same as drilling a single shale well. The wind turbine produces a barrel-equivalent of energy every hour, while the rig produces an actual barrel every two minutes. Even though the barrel-equivalent of energy from a wind turbine costs about the same as a barrel of oil, the latter is easy and cheap to store. Storing wind-generated electricity so that it can be used to power cars or aircraft, however, requires batteries. So while a barrel’s worth of oil weighs just over 300 pounds and can be stored in a $40 tank, storing the equivalent amount of energy in the kind of batteries used by the Tesla car company requires several tons of batteries that would cost several hundred thousand dollars. Even if engineers were able to double or quadruple battery efficacy, that still would not come near to closing the performance gap between energy from wind and energy from liquid hydrocarbons for transportation.
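The storage comparison above can be checked with a back-of-envelope calculation. The sketch below is a minimal illustration, not the author's method: it assumes the common definition of one barrel of oil equivalent (about 5.8 million BTU), an assumed crude density of 0.87 kg/L, and hypothetical battery-pack figures of 0.2 kWh/kg and $200/kWh chosen only for illustration. The function names are likewise hypothetical.

```python
"""Back-of-envelope: one barrel of oil vs. a battery pack holding the same energy.

Battery figures below are illustrative assumptions, not data from the article.
"""

BTU_TO_KWH = 0.000293071       # 1 BTU expressed in kWh
BOE_BTU = 5.8e6                # common definition of one barrel of oil equivalent
BARREL_LITRES = 158.987        # 42 US gallons
CRUDE_KG_PER_LITRE = 0.87      # assumed; real crudes vary
KG_TO_LB = 2.20462


def barrel_energy_kwh() -> float:
    """Energy in one barrel of oil equivalent, in kWh."""
    return BOE_BTU * BTU_TO_KWH


def barrel_mass_lb() -> float:
    """Approximate mass of 42 gallons of crude at the assumed density, in pounds."""
    return BARREL_LITRES * CRUDE_KG_PER_LITRE * KG_TO_LB


def battery_for_barrel(pack_kwh_per_kg: float, pack_usd_per_kwh: float) -> tuple[float, float]:
    """Mass (metric tons) and cost (USD) of a pack storing one barrel's worth of energy."""
    energy_kwh = barrel_energy_kwh()
    mass_tonnes = energy_kwh / pack_kwh_per_kg / 1000.0
    cost_usd = energy_kwh * pack_usd_per_kwh
    return mass_tonnes, cost_usd


if __name__ == "__main__":
    # Hypothetical pack-level specific energies; cost per kWh held fixed at an assumed $200.
    for label, kwh_per_kg in [("today (assumed)", 0.2), ("4x better", 0.8)]:
        tonnes, usd = battery_for_barrel(kwh_per_kg, pack_usd_per_kwh=200.0)
        print(f"{label:>16}: {tonnes:.1f} t of batteries, ${usd:,.0f}")
    print(f"barrel of crude: {barrel_mass_lb():.0f} lb, {barrel_energy_kwh():.0f} kWh")
    # Article's rates: turbine ~1 barrel-equivalent per hour, rig 1 barrel per 2 minutes.
    print(f"rig vs. turbine energy per hour: {60 / 2:.0f}x")
```

Under these assumptions the pack comes out at roughly 8.5 metric tons and about $340,000, against a barrel of crude weighing roughly 300 pounds; even a hypothetical fourfold gain in pack specific energy still leaves about 2 tons of batteries.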

These stark facts often elicit the response that the alternative technologies will get better with time and scale. Of course they will. But there are no significant scale benefits left, since all the underlying materials (concrete, steel, fiberglass, silicon, and corn) are already in mass production. Nor are there big gains possible in the underlying technologies given the physics we know today.

From 1980 to about 2005, wind and solar tech underwent huge gains in core efficiencies that drove costs down some tenfold. But gains since 2005 have been just a fraction of what they had been historically. Expert forecasts that show even smaller incremental gains in the future are not the result of pessimism, but a recognition that wind and solar technologies are now confronting the inevitable law of diminishing returns as they approach physical limits. Wind turbines are constrained by the Betz limit, a physical principle that shows that no more than about 60 percent of air’s kinetic energy can be captured. Modern turbines can already reach 40 percent conversion efficiency. The Shockley-Queisser limit defines how much of the energy in photons can be converted into electricity by a single-junction photovoltaic cell: about 34 percent. The announcement of a silicon cell with 26 percent efficiency shows that we are nearing that boundary too. While scientists are finding new non-silicon options for solar cells (such as the exotic-sounding perovskites), they offer incremental, not revolutionary, cost reductions, and all have similar physics boundaries.
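The Betz figure follows from idealized actuator-disc (momentum) theory, in which the fraction of the wind's kinetic energy captured is C_p = 4a(1 - a)^2, where a is the axial induction factor (the fractional slowdown of the wind at the rotor). The sketch below assumes that textbook model, finds the maximum numerically, and checks it against the exact value of 16/27, about 59.3 percent. The function names are illustrative.

```python
"""Betz limit from ideal actuator-disc theory: C_p(a) = 4a(1 - a)^2."""
from fractions import Fraction


def power_coefficient(a: float) -> float:
    """Fraction of the wind's kinetic energy extracted at axial induction factor a."""
    return 4 * a * (1 - a) ** 2


def betz_limit_numeric(steps: int = 100_000) -> tuple[float, float]:
    """Grid-search a over [0, 0.5] and return (a at maximum, maximum C_p)."""
    best_a, best_cp = 0.0, 0.0
    for i in range(steps + 1):
        a = 0.5 * i / steps
        cp = power_coefficient(a)
        if cp > best_cp:
            best_a, best_cp = a, cp
    return best_a, best_cp


# Analytically, dC_p/da = 4(1 - a)(1 - 3a) = 0 gives a = 1/3 and C_p = 16/27.
BETZ_EXACT = Fraction(16, 27)

if __name__ == "__main__":
    a_opt, cp_max = betz_limit_numeric()
    print(f"numeric optimum: a = {a_opt:.4f}, C_p = {cp_max:.4f}")
    print(f"exact Betz limit: {float(BETZ_EXACT):.4f}")
    # How close a turbine at the article's 40 percent figure already is to the ceiling.
    print(f"0.40 / Betz = {0.40 / float(BETZ_EXACT):.1%}")
```

The search stops at a = 0.5 because beyond that point the simple momentum model predicts a reversed far-wake flow and no longer applies. On these numbers, a 40 percent turbine is already capturing about two-thirds of the theoretical maximum.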

The anemic progress in alternative energy technologies runs counter to what many experts, policymakers, and investors expected to happen given the massive injections of private capital and generous federal and state support. Still, we hear policymakers, investors, and pundits calling for yet more spending on engineering and development, on business stimulus and subsidies. Such proposals often use the Silicon Valley buzzword “disruption,” and draw analogies to prominent technological breakthroughs of yore — the Manhattan Project, the Apollo program, the rapid evolution of computing, the displacement of landline telephony by cellular technology. The technological achievements of the space program or even Silicon Valley may indeed appear miraculous. But do those analogies make sense with regard to the prospects for achieving the fundamental, radical transformations — the miracles — that so many seek in the energy domain, or in any other domain for that matter?

Two Fallacies of Innovation

At first blush, analogies between energy innovation and iconic technological achievements like the Manhattan Project or the Apollo program might appear not just defensible but also quite apt. The United States built the first atomic bomb, won the space race, and became the world’s indisputable powerhouse in science and technology. American scientific and technological prowess may be judged by the facts that the United States is home to the majority of the world’s leading research universities and its residents or citizens have been awarded about half of all Nobel Prizes in science, medicine, and economics.

The problem with these analogies is that they paint an incorrect, though attractive, picture of how innovation works: The government selects a practical goal and provides funding for the research, with a straight path from idea to insight, then to innovation and industrial application. Following this model, its proponents tell us, the government and its orbit of researchers and advisors not only built the atomic bomb and put a man on the moon, but also invented the Internet — from which emerged many new products and whole new industries. Why not do that again?

But this picture is flawed, in at least two ways. First, it suffers from what we might call the moonshot fallacy, which goes like this: “If we can put a man on the moon, surely we can [fill in the blank with any aspirational goal].” It is true that engineers have achieved amazing feats when tasked with particular, practical goals. But not all goals are equally achievable. Bill Gates’s hoped-for miracle of transforming the global energy economy is not like putting a few people on the moon a few times; it is more like putting everybody on the moon — permanently. The former was a one-time engineering feat; the latter would require an array of new technologies to be invented and then integrated into the world economy at every level. Most of our present challenges, from curing disease to feeding or transporting billions of people, are also fundamentally unlike the discrete engineering challenge posed by the race to the moon. Engineers are capable of performing remarkable feats once or a few times, especially if (as was more or less the case with the Manhattan Project and the Apollo program) cost is no object. But scale matters — especially in physics. It is not just more challenging but qualitatively different to engineer devices or systems that are both effective and affordable at a global scale.

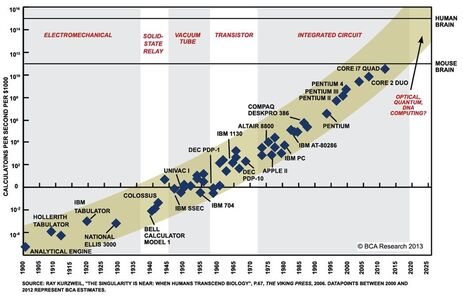

The linear model of innovation also suffers from something harder to anticipate. Call it the Moore’s Law fallacy. First proposed in 1965 by Gordon Moore, who would later co-found Intel, Moore’s Law is a prediction that the number of transistors fabricated on a single silicon microchip doubles roughly every two years (Moore’s 1965 estimate was a doubling every year; he revised it to two years in 1975), commensurately dragging down the cost of computing. It has come to epitomize the relentless and astonishing gains in computing power (and cost-effectiveness) characteristic of modern information technology. More and more information can be stored and transported at ever-smaller scales, using profoundly fewer atoms and less energy per unit of data. On top of this, software engineers use clever mathematical codes — themselves enabled by increasingly powerful computing — to parse, slice, and shrink information itself, compressing it without loss of integrity. The combination is profound. Compared to the dawn of modern computing, today’s information hardware consumes over 100 million times less energy per logic operation, while working in a physical space more than one million times smaller. A single smartphone is thousands of times more powerful than a room-sized IBM mainframe from the 1970s.
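The doubling rule described above compounds quickly. The minimal sketch below treats the two-year doubling period stated in the text as fixed, which is an idealization, and shows the cumulative factor over a few spans along with how many doublings a 100-million-fold gain represents. The helper names are hypothetical.

```python
"""Compounding under a fixed doubling period, as in Moore's Law."""
import math


def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative gain after `years` if capability doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def years_to_reach(factor: float, doubling_period_years: float = 2.0) -> float:
    """Years needed to accumulate a given multiplicative gain."""
    return math.log2(factor) * doubling_period_years


if __name__ == "__main__":
    for years in (10, 20, 50):
        print(f"{years:>2} years -> {growth_factor(years):,.0f}x")
    # A 100-million-fold gain corresponds to about 26.6 doublings.
    print(f"doublings for 1e8: {math.log2(1e8):.1f}")
    print(f"years at 2-year doubling: {years_to_reach(1e8):.1f}")
```

At a two-year period, 20 years yields a factor of 1,024 and 50 years a factor of about 33.6 million; it is this exponent that the physical domains discussed below do not share.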

Many tech entrepreneurs, along with the politicians and pundits who are in awe of them, seem to believe that such disruptive Silicon Valley innovation is imminently achievable across nearly every domain — including and especially energy. They point to the way the Internet has disrupted big-box retail, newspapers, taxis, hotels, and more — the ‘Uberization’ of everything. When it comes to energy, digital disruptors believe that machines like car engines can follow the same tantalizing tech trajectory as computer chips.

Underlying the Moore’s Law fallacy is a category error. The software challenge of how to store the most information in the smallest possible physical space is distinct from the hardware challenge of how to move physical objects using as little energy as possible. Different laws of physics come into play. In the world of people, cars, planes, trucks, and large-scale industrial systems — as opposed to the world of algorithms and bits — hardware tends to expand, not shrink, along with speed and carrying capacity. The energy needed to move a ton of people, heat a ton of steel or silicon, or grow a ton of food is determined by properties of nature whose boundaries are set by laws of gravity, inertia, friction, mass, and thermodynamics. If energy technology had followed a Moore’s Law trajectory, today’s car engine would have shrunk to the size of an ant while producing a thousandfold more horsepower. While it is true that engineers can build ant-sized engines, such engines produce roughly 100 billion times less power than, say, a Subaru. No amount of money or Silicon Valley magic will cause a car engine’s power, or its equivalent, to disappear into your pocket.
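The contrast with the information domain can be made concrete with textbook mechanics: the ideal energy to accelerate, lift, or heat a given mass is fixed by that mass and by physics, not by how clever the hardware is. The sketch below computes these ideal minimums. The vehicle mass and speed, and a steel specific heat held constant at about 490 J/(kg·K), are illustrative assumptions (real specific heat varies with temperature), and real machines need more than these minimums because of losses.

```python
"""Ideal minimum energies for moving or heating matter (no losses included)."""

G = 9.81  # standard gravity, m/s^2


def kinetic_energy_j(mass_kg: float, speed_m_s: float) -> float:
    """Energy to bring a mass from rest to a given speed: (1/2) m v^2."""
    return 0.5 * mass_kg * speed_m_s ** 2


def lift_energy_j(mass_kg: float, height_m: float) -> float:
    """Energy to raise a mass against gravity: m g h."""
    return mass_kg * G * height_m


def heating_energy_j(mass_kg: float, specific_heat_j_per_kg_k: float, delta_t_k: float) -> float:
    """Sensible heat for a temperature rise, assuming constant specific heat: m c dT."""
    return mass_kg * specific_heat_j_per_kg_k * delta_t_k


if __name__ == "__main__":
    # Illustrative inputs (assumptions): a 1,500 kg car at 30 m/s (about 67 mph),
    # one metric ton lifted 10 m, one metric ton of steel heated by 1,000 K.
    print(f"car to 30 m/s:     {kinetic_energy_j(1500, 30) / 1e3:,.0f} kJ")
    print(f"1 t lifted 10 m:   {lift_energy_j(1000, 10) / 1e3:,.1f} kJ")
    print(f"1 t steel +1000 K: {heating_energy_j(1000, 490, 1000) / 3.6e6:,.0f} kWh")
```

No improvement in chips or software lowers these figures; better engineering can only trim the losses that real machines add on top of them.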

Moore’s Law-like improvements in energy are not just unlikely; they cannot happen given the physics we know today. This is not to say that Silicon Valley and information technology will not dramatically affect the production of energy and physical goods. On the contrary, there is enormous opportunity with information and analytics to wring far more efficiency out of our physical and energy systems. But wringing efficiency out of existing infrastructures — as valuable as that is — is akin to ‘Uberizing’ solar panels and shale rigs. It will get us more efficiency, but not the kind of disruptions — the miracles — that would be analogous to discovering petroleum or nuclear fission, or inventing the photovoltaic cell.

The Pursuit of Disruption

When genuinely miraculous technologies do emerge, they frequently come not from extensions of known science and technology but from foundational conceptual revolutions. Consider modern chemistry, which took us from alchemy all the way to combustion, weapons of war, and modern medicine; or quantum physics, including Einstein’s explanation of the photoelectric effect, which earned him his Nobel Prize and was important in the history of television, among other things; or electromagnetism, pioneered by Faraday and Maxwell, without which we would have no electric motors; or Turing’s mathematics, which made possible modern computer operating systems; or von Neumann’s and Morgenstern’s mathematics of game theory, so widely used in business, economics, and political science.

Of course, such “miraculous” ideas do not occur in a vacuum, nor do they generate devices, products, tools, services, and companies overnight or in a straightforward, linear fashion. There is a dynamic interaction between scientific insights and the technologies, financing, and engineering, as well as the standards, regulations, and policies, that complement, enable, and develop them. But the point is that such foundational ideas are the stuff of true disruptions, rather than linear extensions of existing capabilities and knowledge or the result of goal-directed research.

As it stands today, over half of all spending on basic research in the United States (59 percent) comes from the federal government and universities. (And the overall federal share is likely greater than reported since industry self-identifies research activity categories, leaving “basic” subject to definitional exaggeration.) While the United States remains the world’s foremost supporter of research, the nation is now rapidly losing its lead. At stake is not mere prestige but the erosion of the foundation of innovation, and thus the long-term weakening of the economy, not to mention the capacity to find new “miracles” in any field....

The energy miracle Bill Gates seeks may well be found as a consequence of new science or new technology arising from research programs studying questions that seem distant from energy — and indeed, distant from any practical use. It is often said that necessity is the mother of invention. But curiosity is the mother of miracles. We need both. And a preoccupation with the former will not produce more of the latter. Perhaps Bill Gates’s enduring legacy will be the revitalization of federal and philanthropic support for the curious pursuits of brilliant minds.

The original article was published in The New Atlantis.

Author Info: Mark P. Mills, a physicist, is a senior fellow at the Manhattan Institute and the CEO of a tech-centric capital advisory group.

In 2016, Mark was named “Energy Writer of the Year” by the American Energy Society.
